Google Classroom

AI Grading Assistant Feature Add-on

A concept for an AI Grading Assistant feature add-on to Google Classroom that helps lighten the grading load for English teachers.

Responsibilities

UX Researcher, UX Designer, UI Designer

Industry

Ed Tech

Date completed

Problem overview

English teachers are overwhelmed by the task of grading student writing, which often adds hours on top of an already full work day.

Proposed solution

An AI feature add-on for Google Classroom that allows teachers to provide timely, effective student feedback while making the most of their time and energy.

Background

Addressing Teacher Burnout

The teaching profession for TK-12 teachers has experienced an attrition rate of about 8% for the past 60 years. Since the pandemic, attrition rates have doubled in some states. According to the US Department of Education, 45% of US public schools had at least one vacancy by the end of October 2022 and over 100,000 classrooms are staffed by people lacking qualifications.

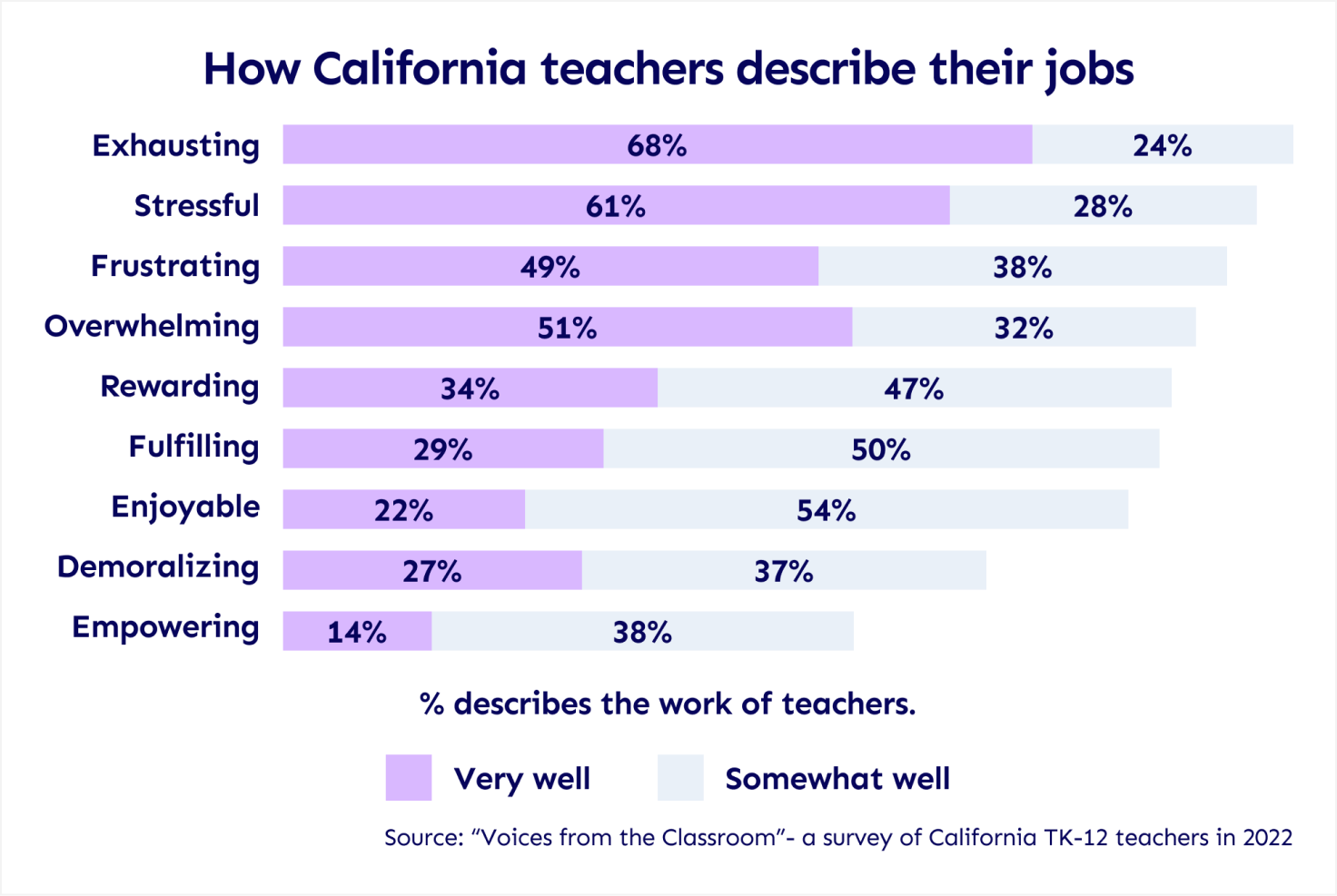

Findings from a 2022 survey of California classroom teachers revealed more about how teachers describe their jobs. Exhaustion, stress, and overwhelm rank high, while feelings of enjoyment, fulfillment, and empowerment rank low.

As a former high school English teacher, I am all too familiar with the seemingly impossible demands put on classroom teachers and can attest to the experience of burnout.

I began this project wanting to devise a solution that could make the workload of a high school English teacher more sustainable. I chose to focus on one especially difficult aspect of the job: grading student writing.

Discovery

Guilty: Grading falls to the bottom of the daily to-do list

Ask any high school English teacher and they would agree: grading kinda sucks. Especially when it comes to grading student writing.

Interviews with three high school English teachers revealed some surprises. I learned that teachers enjoy reading student writing and that all of them view grading as an integral part of teaching. But however hard they try, teachers can’t seem to finish grading within the 8-hour workday. One teacher mentioned that she often doesn’t get home until 7PM and can’t get to grading until 9PM.

I dug deeper to get to the root of the problem.

Interviews: Grading attitudes and trends

In my interviews, I focused my questions on teachers’ day-to-day routines and on how they give students feedback in the classroom. Teachers shared their attitudes, strategies, and pain points, as well as the tools they use in the grading process.

Goals

Connect with students: Reading and responding to student writing helps teachers connect to students.

Learn about students: Good teaching means knowing your students: what they get, what they don’t.

Support student growth: When students get individualized feedback on their writing, they tend to improve.

Methods

Immediate feedback: Teachers aim to give frequent feedback on smaller chunks of work.

Rubrics: Rubrics give students a clear focus on what they need to work on and give teachers criteria to evaluate against.

Feedback loop: Forming an ongoing conversation about what students get and don’t get is good practice.

Frustrations

Cognitively demanding: Grading involves reading, comprehending, evaluating, and responding.

Takes too long: Grading papers for multiple classes takes up a lot of time outside the standard work day.

Emotional toll: The demands of grading make it daunting, which heightens feelings of stress and guilt.

Grading is super important, but super taxing

The evidence pointed to a key finding: the cognitive load and volume of work make grading student writing overwhelming. Teachers are reluctant to grade because they know it will take a lot of time and energy to get through all the student work. Grading inevitably falls to the bottom of their to-do list, even though they know how important it is. The mismatch between what they can feasibly do and what they wish they could do leaves them feeling guilty and disempowered.

Competitive Landscape

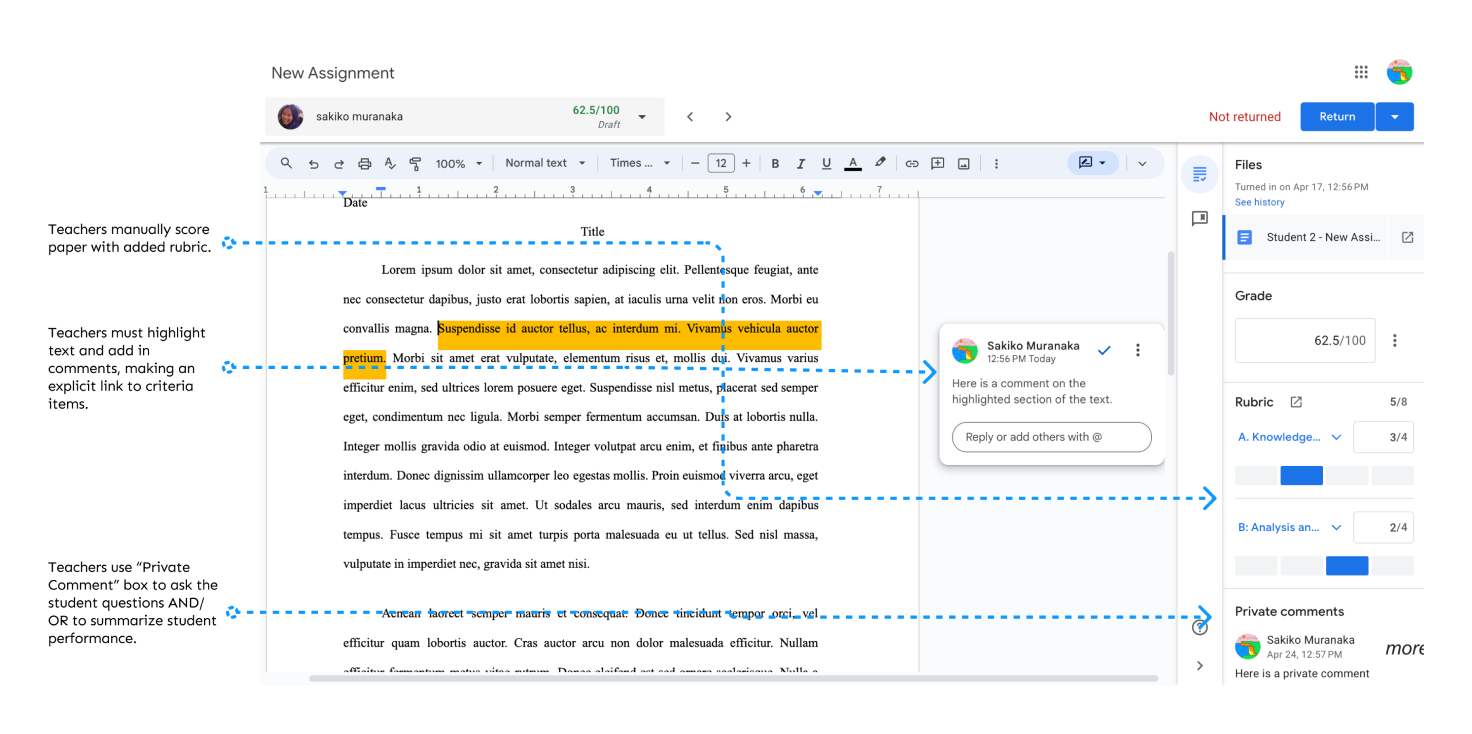

Can we enhance a common ed tech tool?: Google Classroom Audit

Interviews revealed one common ed tech tool that teachers use to assign, track and grade student writing: Google Classroom. According to Google’s blog, in 2021, Google Classroom served 150 million students, educators and school leaders around the world, a 275% increase from the previous year.

I decided to audit Google Classroom to assess its strengths and weaknesses as a feedback tool, based on interview findings about teacher needs.

Adding a Rubric

Adding a Rubric is easy to do.

Grading with a Rubric

Comments and scores must be entered manually.

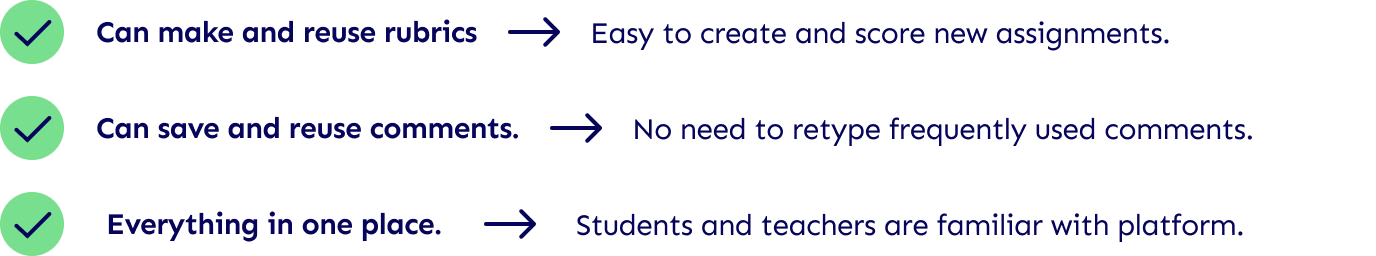

Strengths

Weaknesses

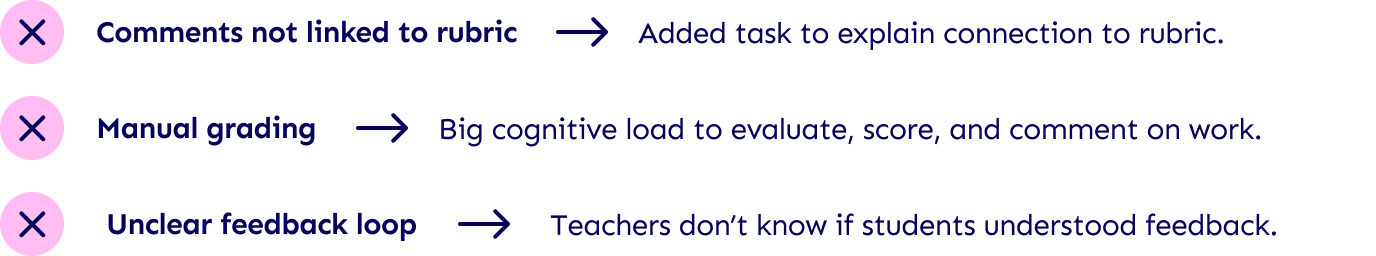

Competitive Analysis: Exposing Opportunities

I investigated other frequently used ed tech feedback tools to see where those tools excelled and where they fell short.

Findings: No product is optimized to provide quick feedback on student writing

Direct competitor Turnitin has features similar to Google Classroom’s, but none that would lighten the cognitive load of grading or speed up the process. Gradescope, on the other hand, harnesses AI to allow for quick, thorough feedback, but it is optimized for math and the sciences, not the humanities. It also requires learning a new platform.

Indirect competitors Grammarly and Kahoot allow for immediate feedback, but lack a focus on essay writing.

Define

High School English Teachers

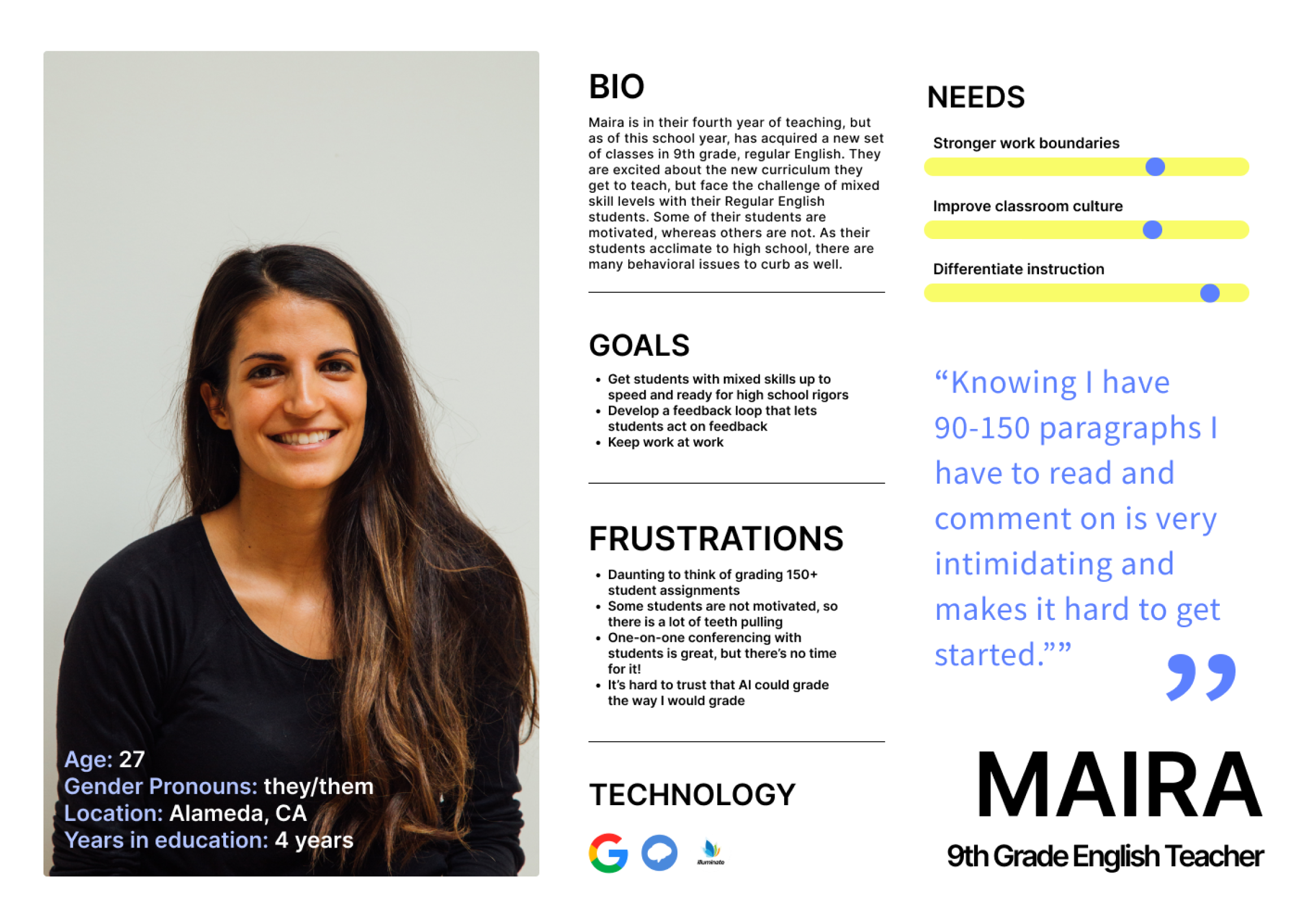

Based on interviews, I determined that although differing student demographics call for varied grading approaches, all English teachers ultimately struggle with the same thing: grading student writing in a timely way. I created a persona with this finding in mind, then derived a jobs-to-be-done (JTBD) statement and a “How might we” (HMW) question to frame the problem.

Persona


JTBD

English teachers have to grade dozens of written assignments on a regular basis, but they feel extremely overwhelmed by the task because their workday is already overburdened.

Ideate

Enhancing Google Classroom

I began brainstorming solutions for a Google Classroom feature add-on that would help lighten the cognitive load of grading. I started with a value proposition chart to determine what features would have the biggest impact. Then, I conducted a secondary interview to determine what to prioritize.

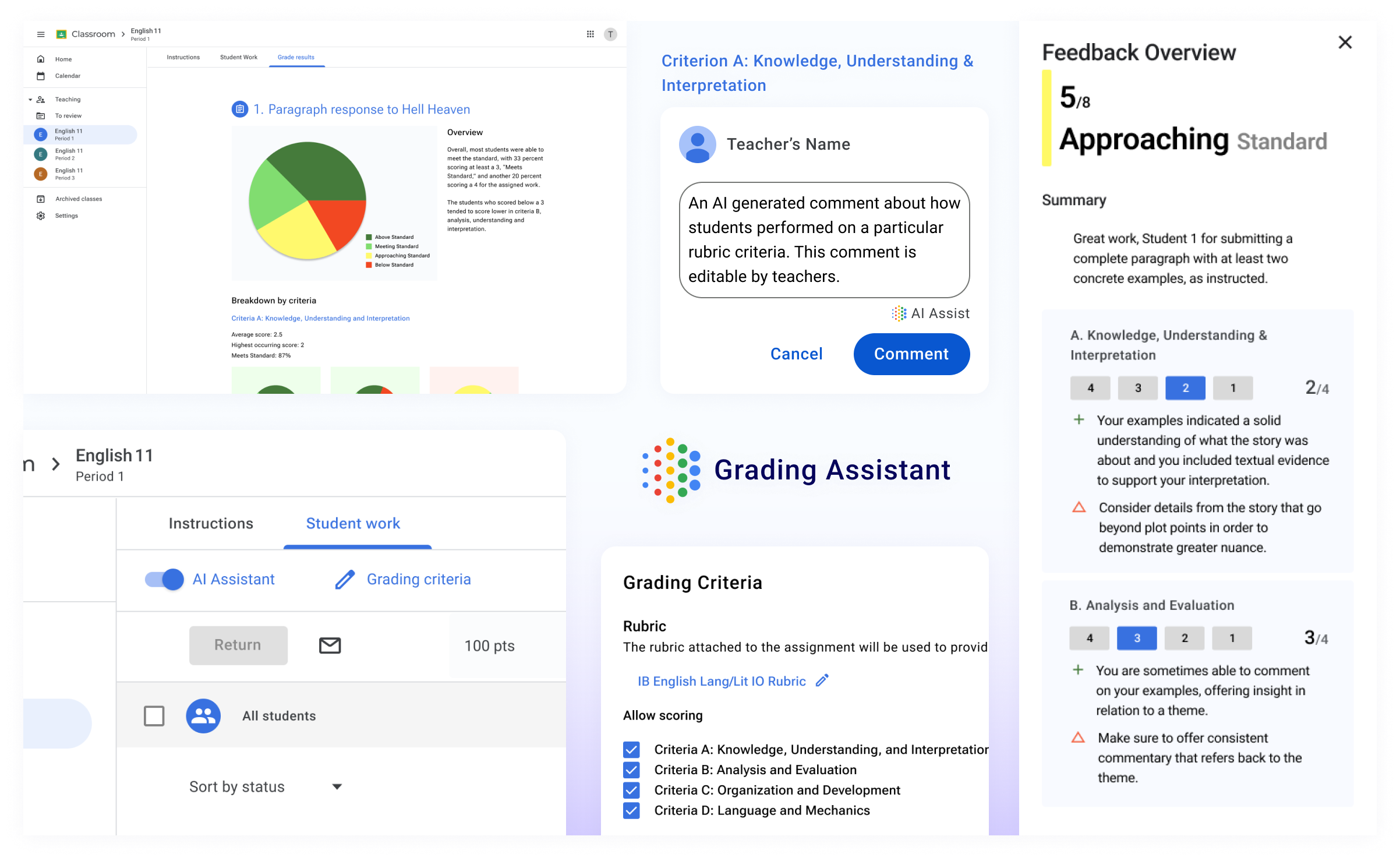

Value Proposition: AI is key

Keeping grading best practices in mind, I used the value proposition chart to identify which features would have the most impact. The strongest gain creators involved AI: it could help generate feedback on student work, assist with scoring, and produce statistical reports on student performance for teachers. AI was clearly key.

Biggest Gain Creators

Immediate feedback

Students don’t need to wait weeks for feedback and can learn more quickly.

Rubric-linked comments

Gives clear focus to students and generates data for performance reports.

Feedback loop

Generates additional prompts to make sure students understand the feedback.

Another user interview…

I hypothesized that some AI functions would be more useful than others, so I interviewed a fourth user. I gave them a list of nine possible functions and asked them to pick the three most important. Their choices, listed below, helped me narrow the focus of the feature add-on.

- Being able to change AI-generated responses sent back to students

- In-text annotations attached to rubric criteria

- Overall summary of student performance

An AI Grading Assistant for Google Classroom

Adjustable comments

Rubric-linked comments

Comment summary

Feedback loop

With the value proposition chart, feature set, and user interviews, I decided on an “AI grading assistant” with the following functions:

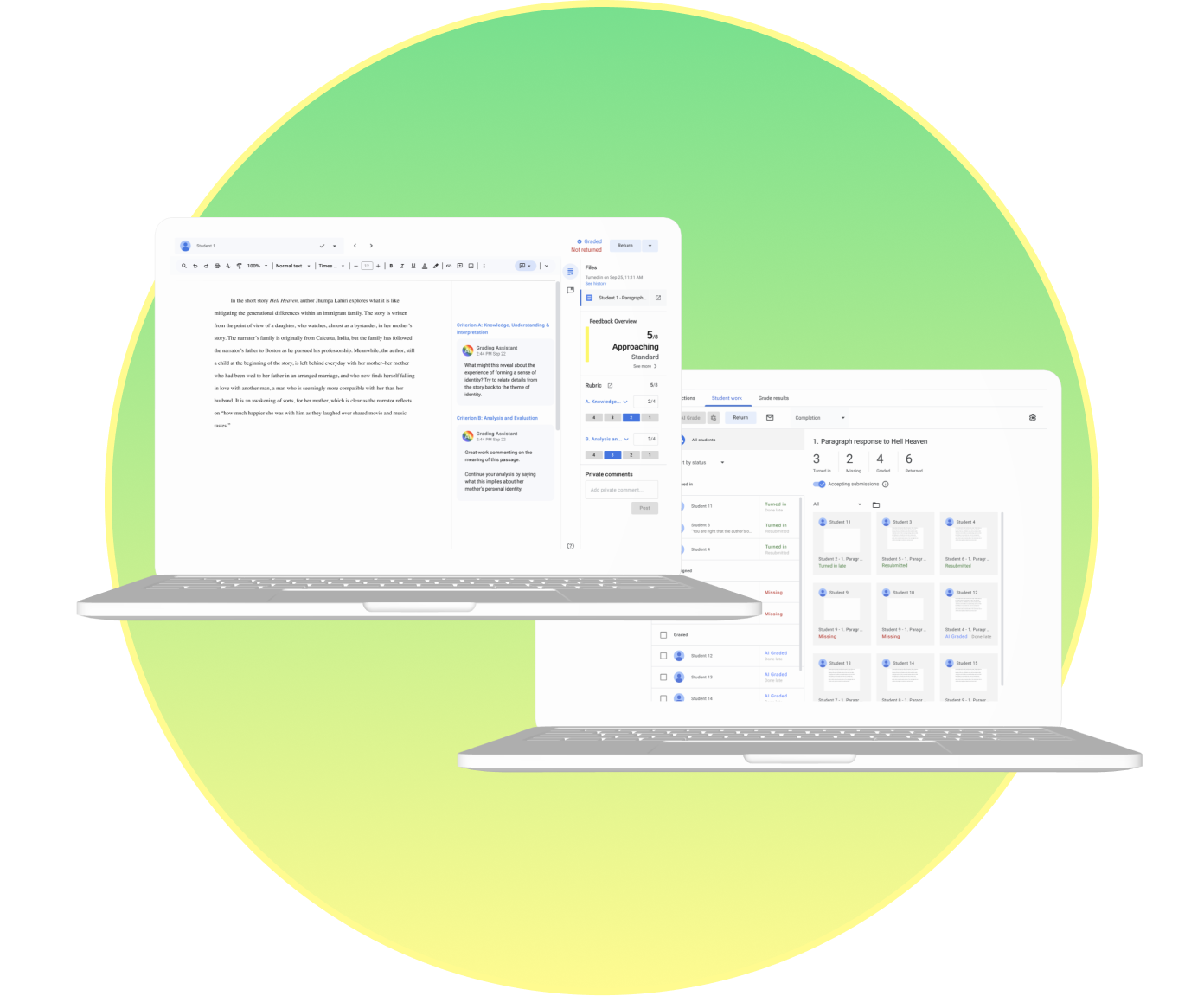

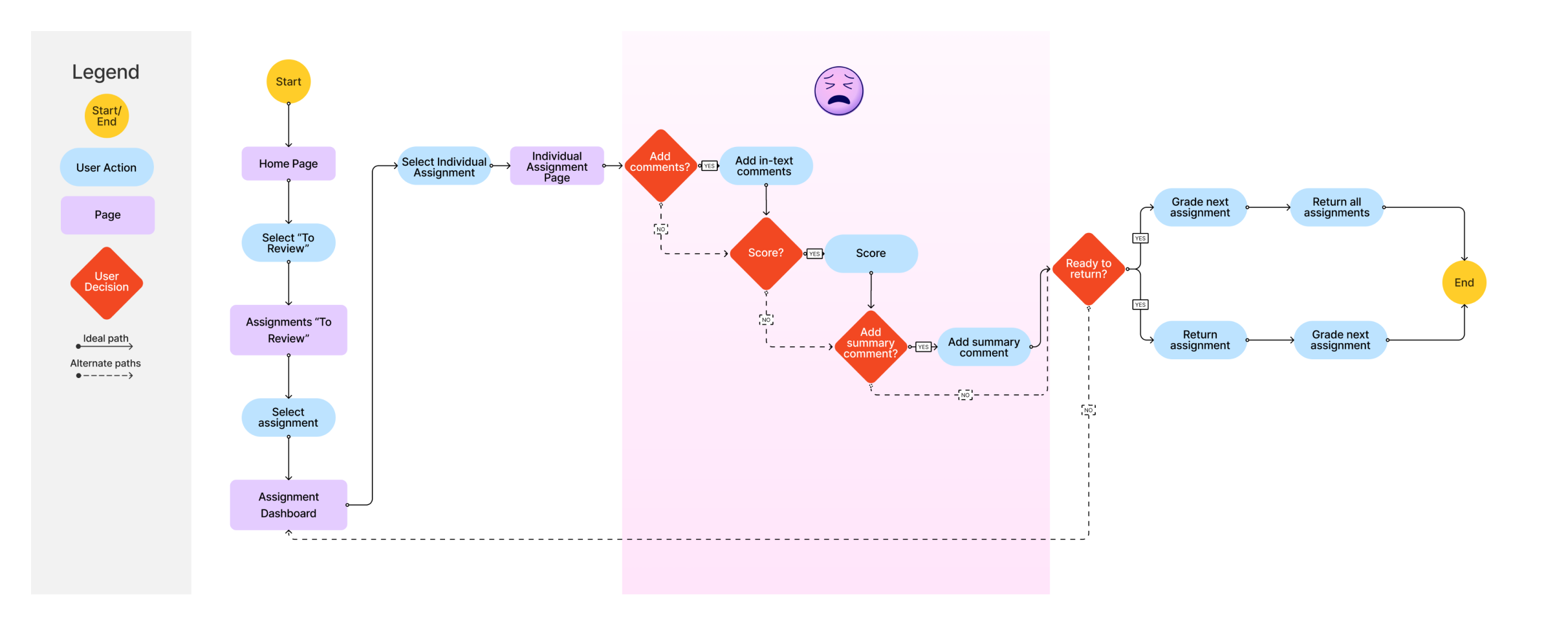

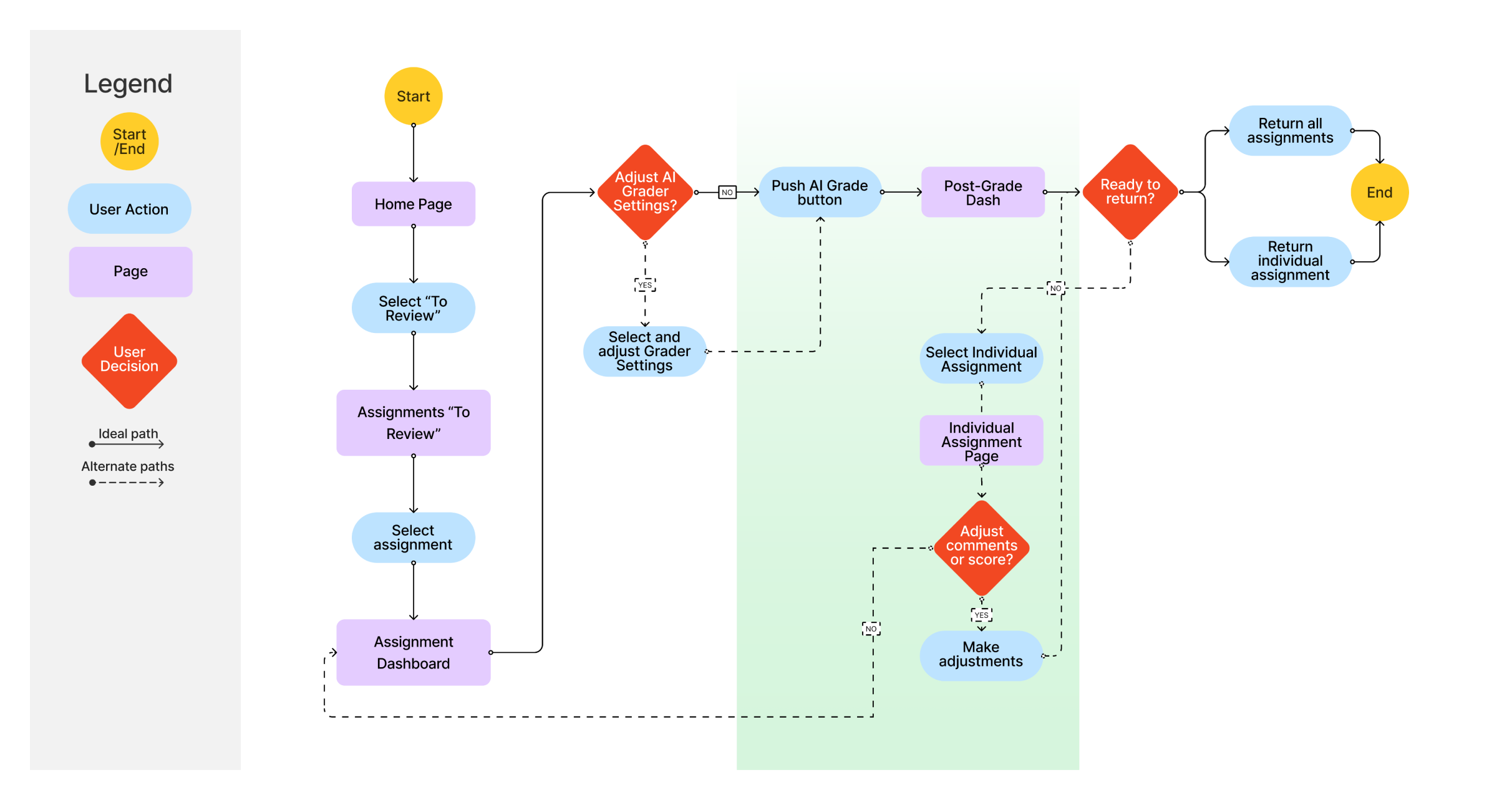

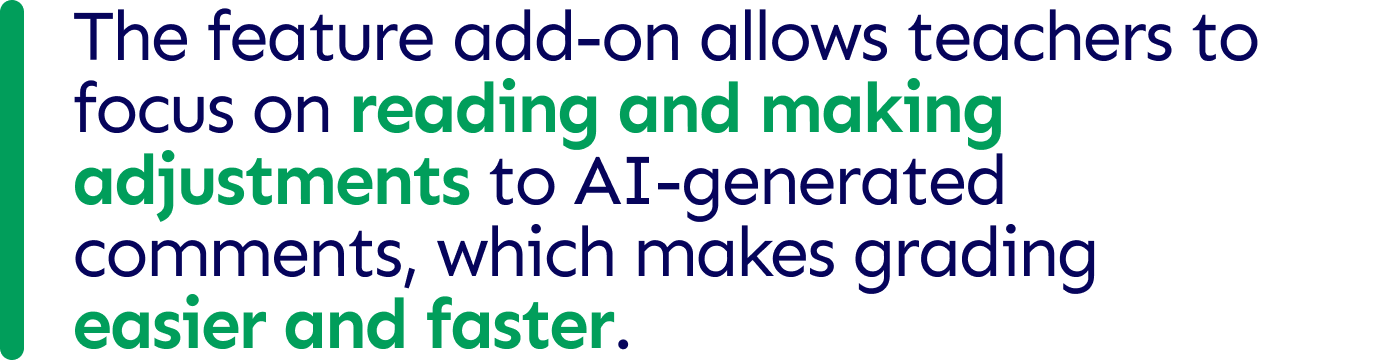

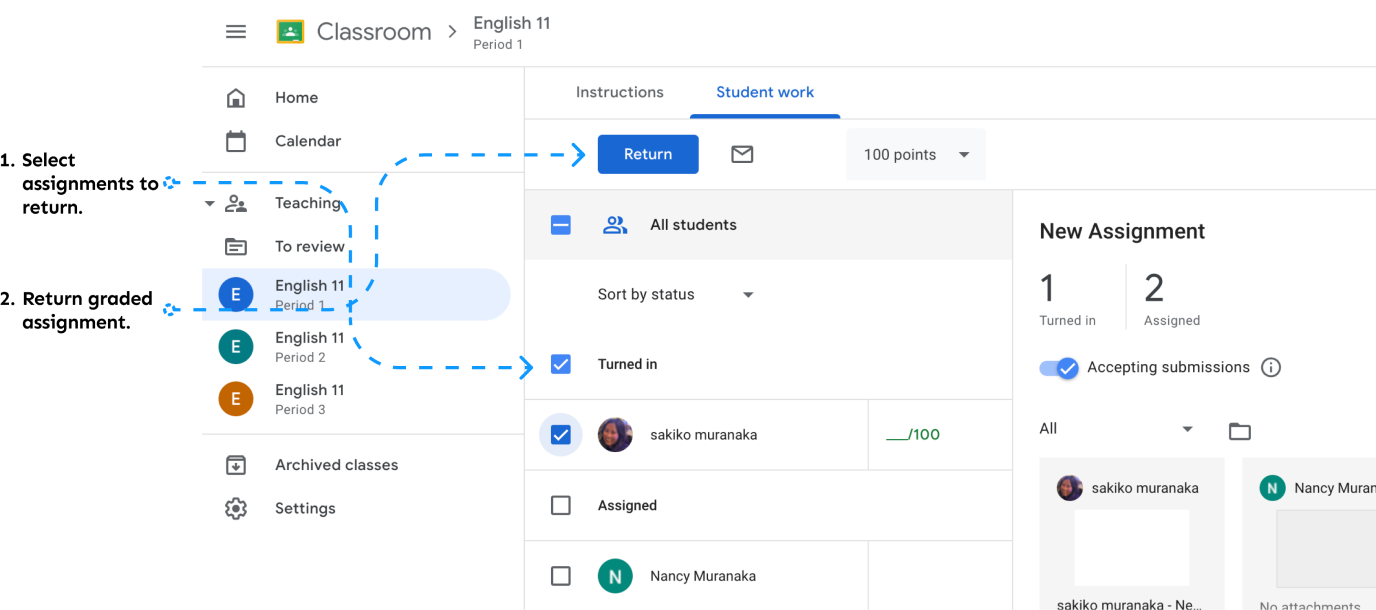

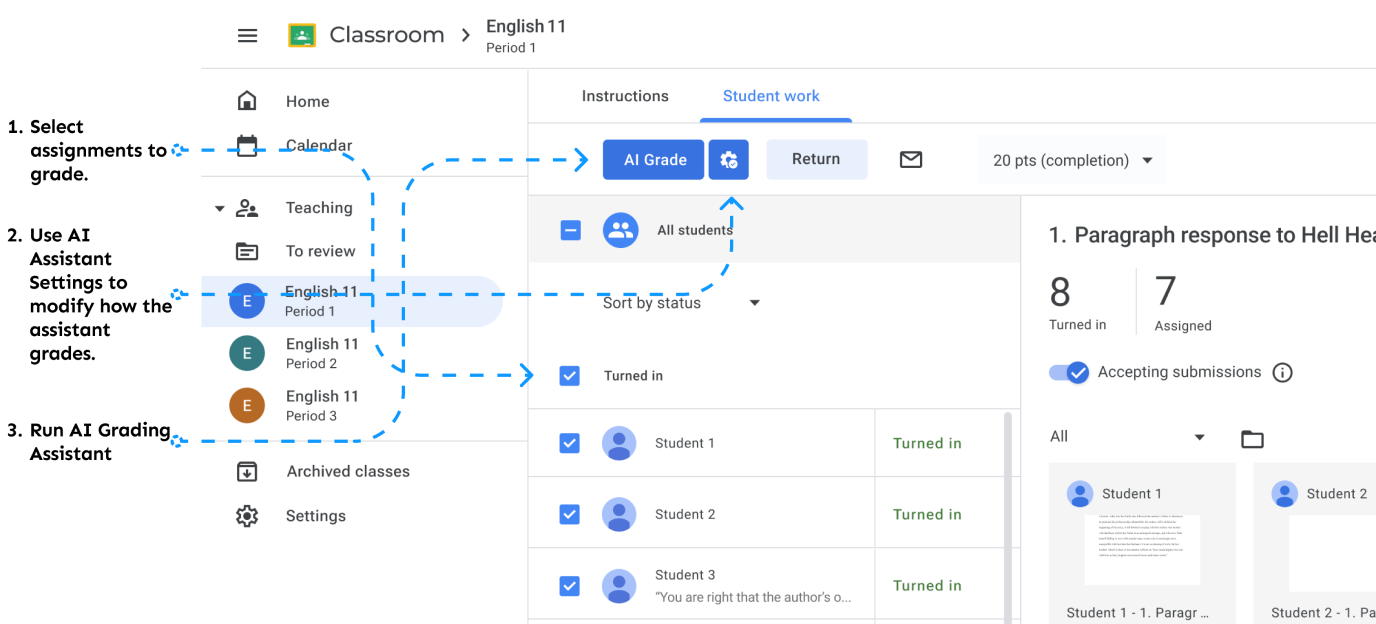

Incorporating the AI Grading Assistant in the User Flow

After considering the features, I determined that the primary flow would revolve around grading (and returning) student work. After mapping out the grading flow for teachers as is, I designed a flow that accounted for the new feature.

Original User Flow


The user begins by selecting which assignment to grade. Then, they must open each student’s assignment and add comments, score the work, and leave a summary comment as they see fit. They can choose to return each assignment as they grade, or return them all at once.

Pain Points

With Feature Add-on


With the feature add-on, the AI Grading Assistant would be activated by the push of a button on the Assignment Dashboard. Users have the option of adjusting the AI grader settings before running the Grading Assistant. After the grader is run, users can adjust comments or score from the individual student assignment page like in the original flow.

Opportunities

Accounting for the new feature: Design Considerations and Decisions

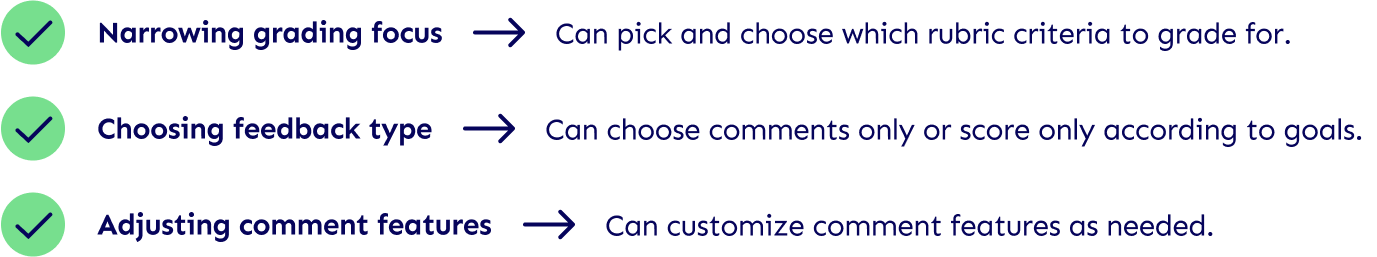

Important considerations for the feature add-on came up as I moved forward with the design. Teachers prioritized customizability and control when it came to AI. For this reason, I made decisions that would allow user input at major junctions.

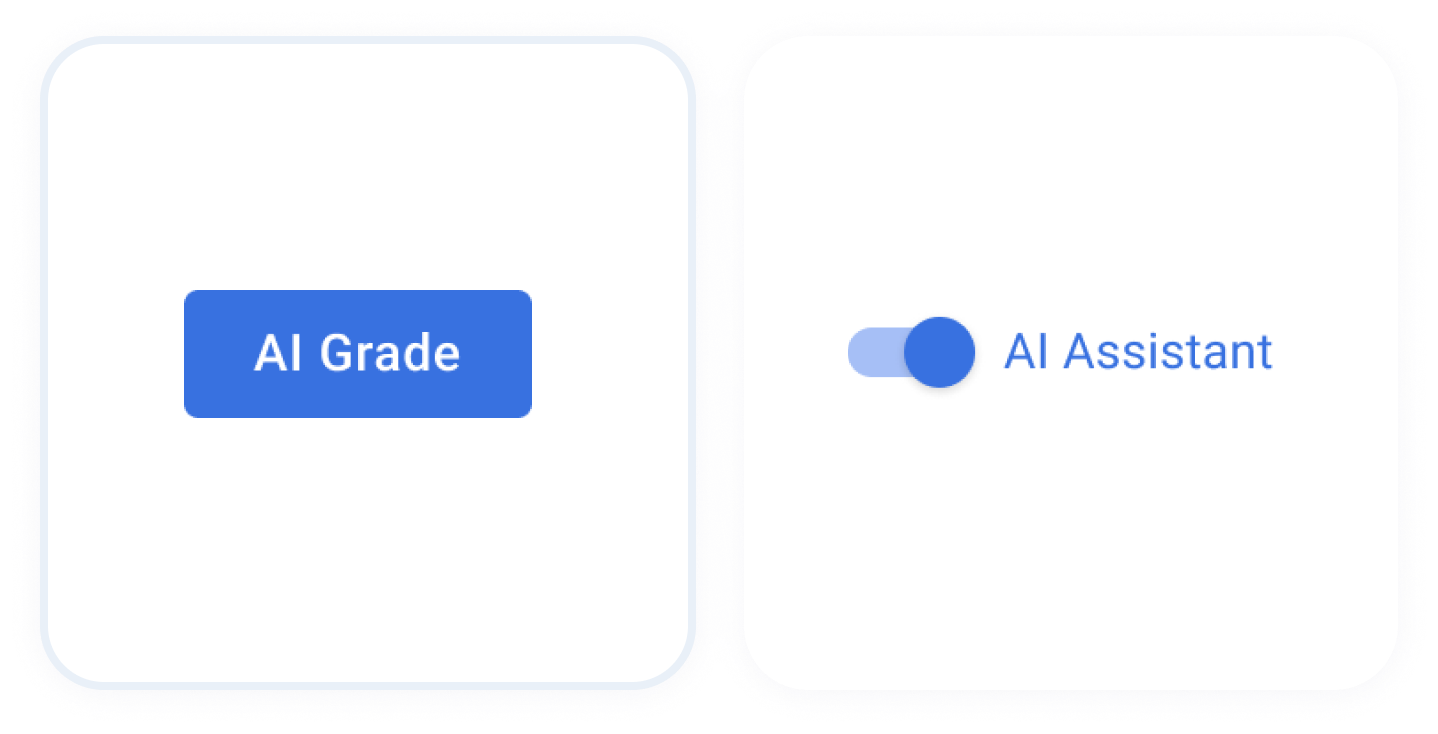

1. How should the “AI assistant” be activated?

I predicted that activating the AI Assistant with a button would give teachers a greater sense of control than a switch. The button gives a clear indication of the action. Teachers choose to use the assistant (or not) for each assignment.

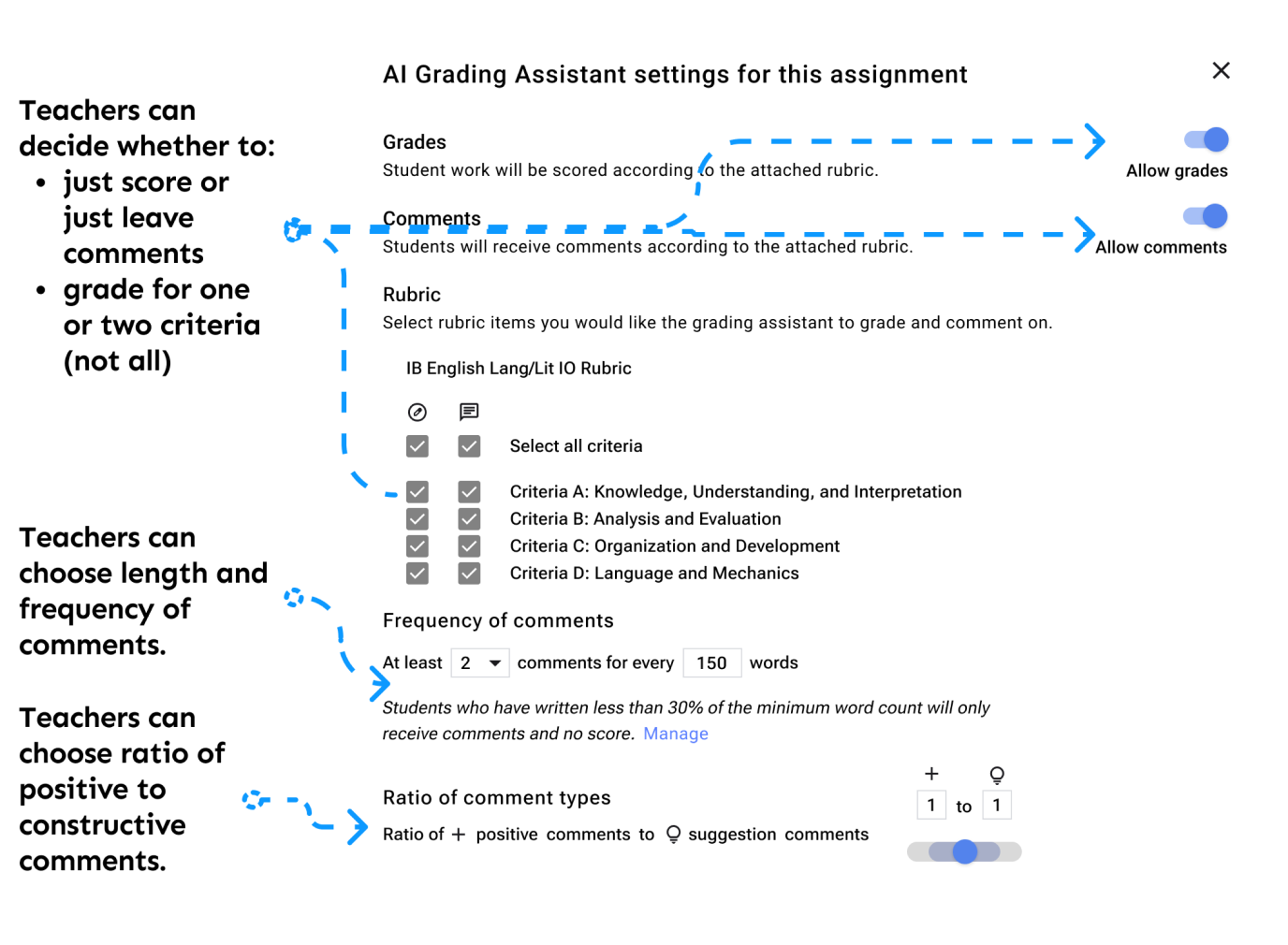

2. What input would teachers want in how the AI assistant grades?

In user interviews, one teacher mentioned her process of assigning shorter written assignments and assessing just one skill. Another teacher discussed how some students get hyper-fixated on their score (and not the actual feedback).

I decided to include settings for the AI assistant that would mimic a teacher’s own considerations when grading.

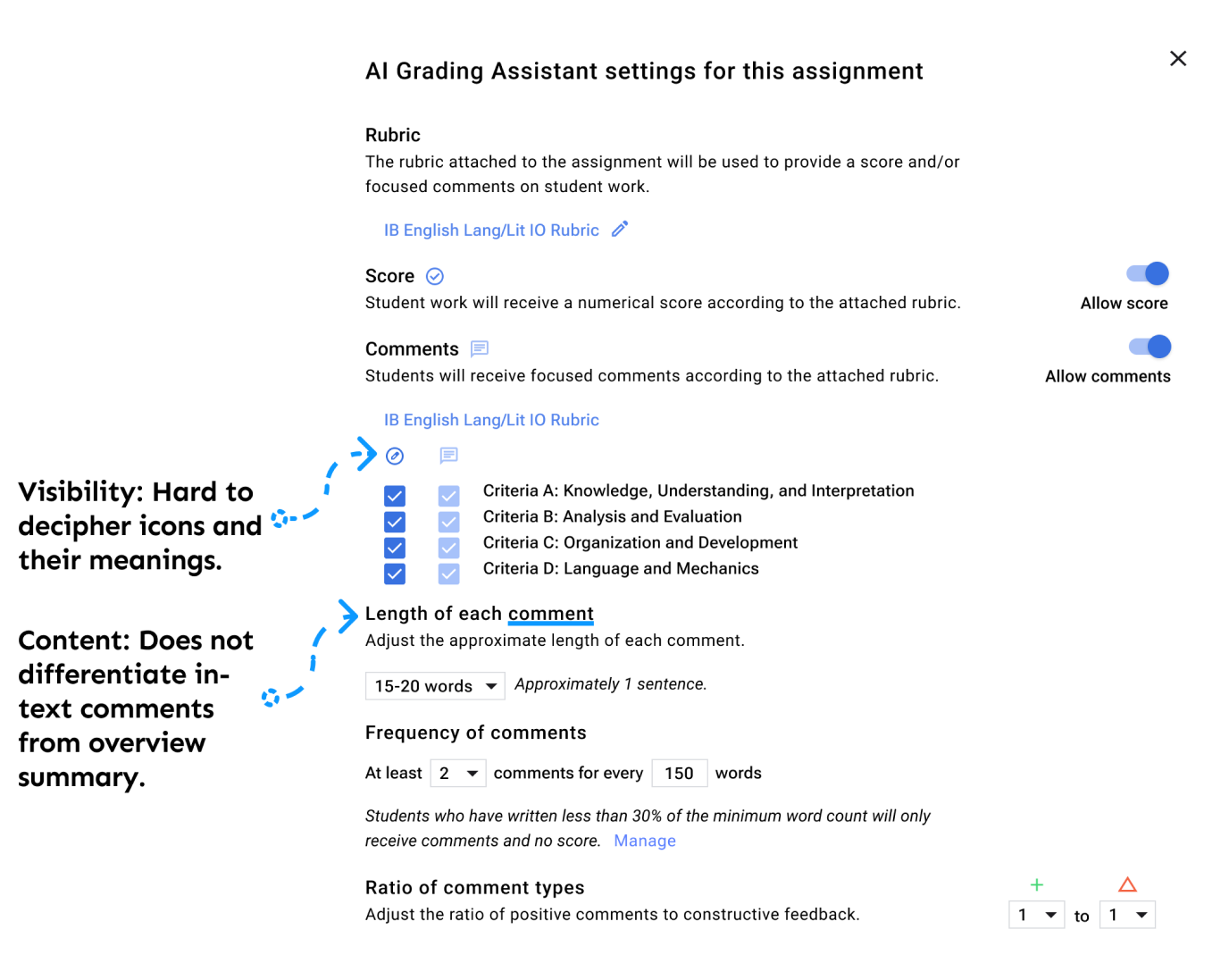

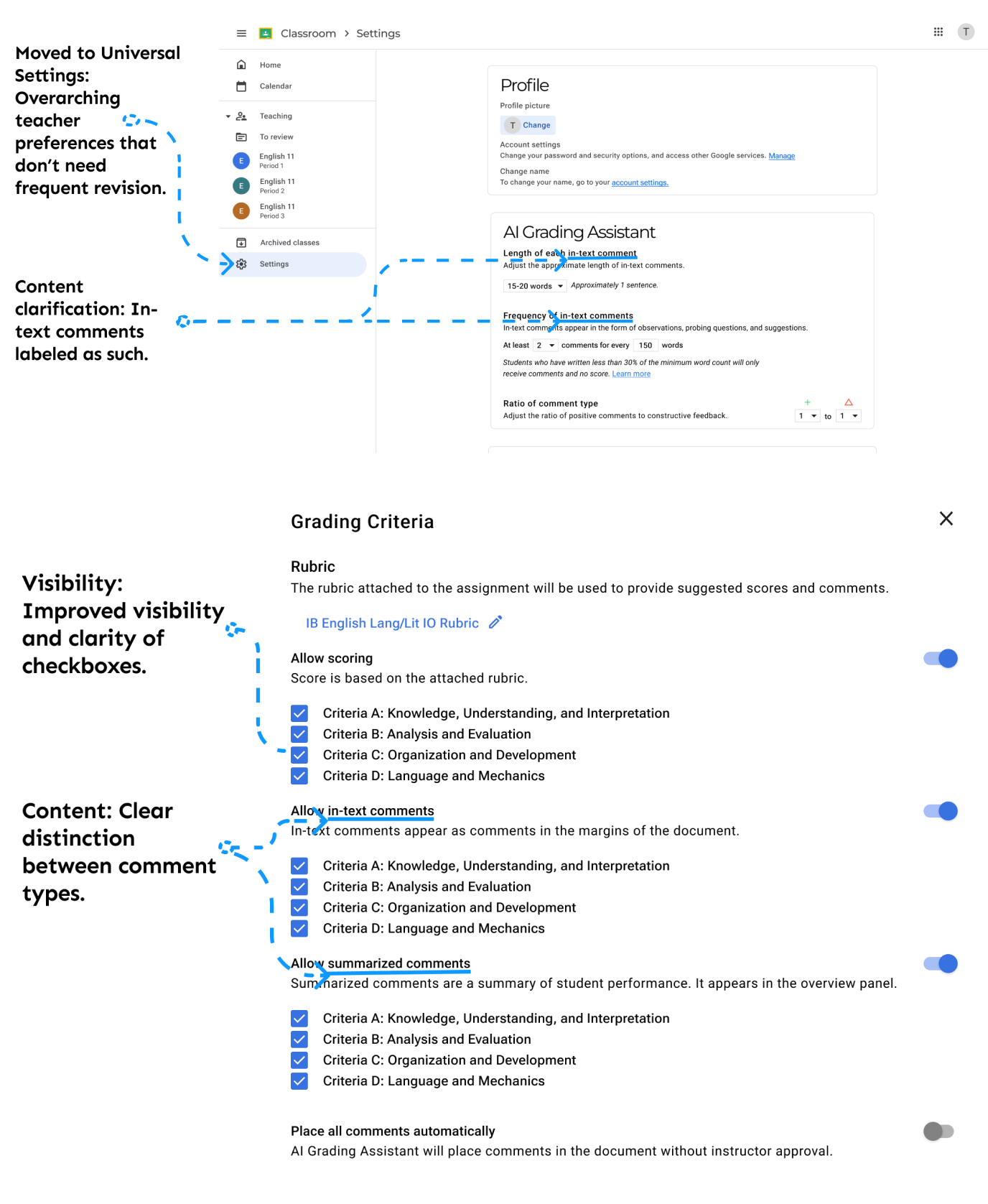

3. AI Assistant settings: Where should it be housed?

Deciding where to put the AI Assistant settings was important because the placement needs to match the teacher’s workflow. When would teachers want to adjust settings? (When creating an assignment? When grading?) How often would they adjust them? Where might they expect the button to be?

Original Assignment Dashboard

Teachers assign work, students submit work, and teachers open and grade submitted work from the Assignment Dashboard.

Assignment Dashboard with Feature Add-On

I decided to place the AI Assistant settings button next to the “AI grade” button, so users could adjust settings before running the AI. This placement prompts users with settings right when they are ready to grade, which more closely resembles their grading process.

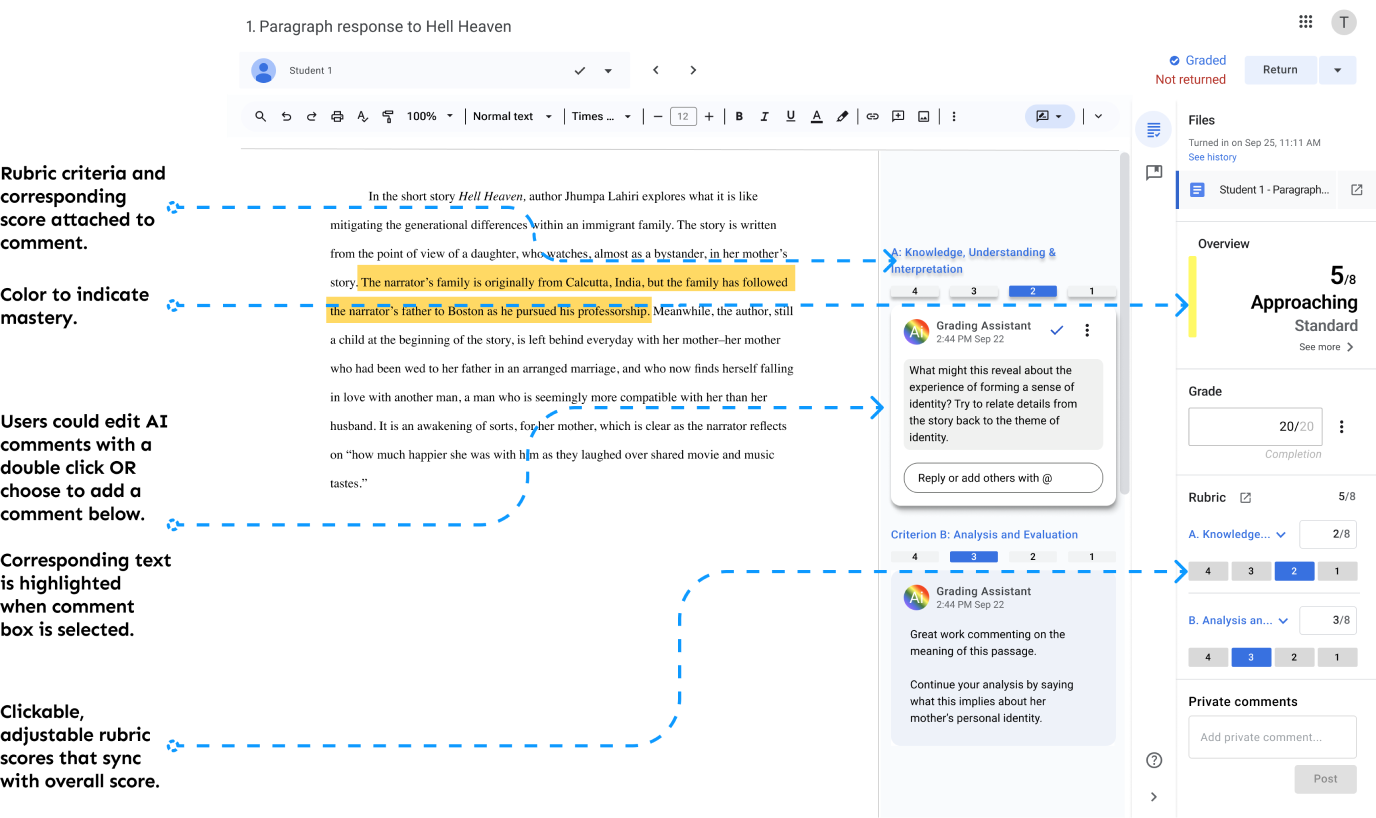

4. What should AI scoring and commenting look like on individual student assignments?

The individual assignment page is where teachers read and evaluate student work. In the feature add-on, I wanted to retain the original look and functionality of the current page, while adding new functions.

Individual Assignment Page

Teachers can interact with AI comments and score the same way they currently do.

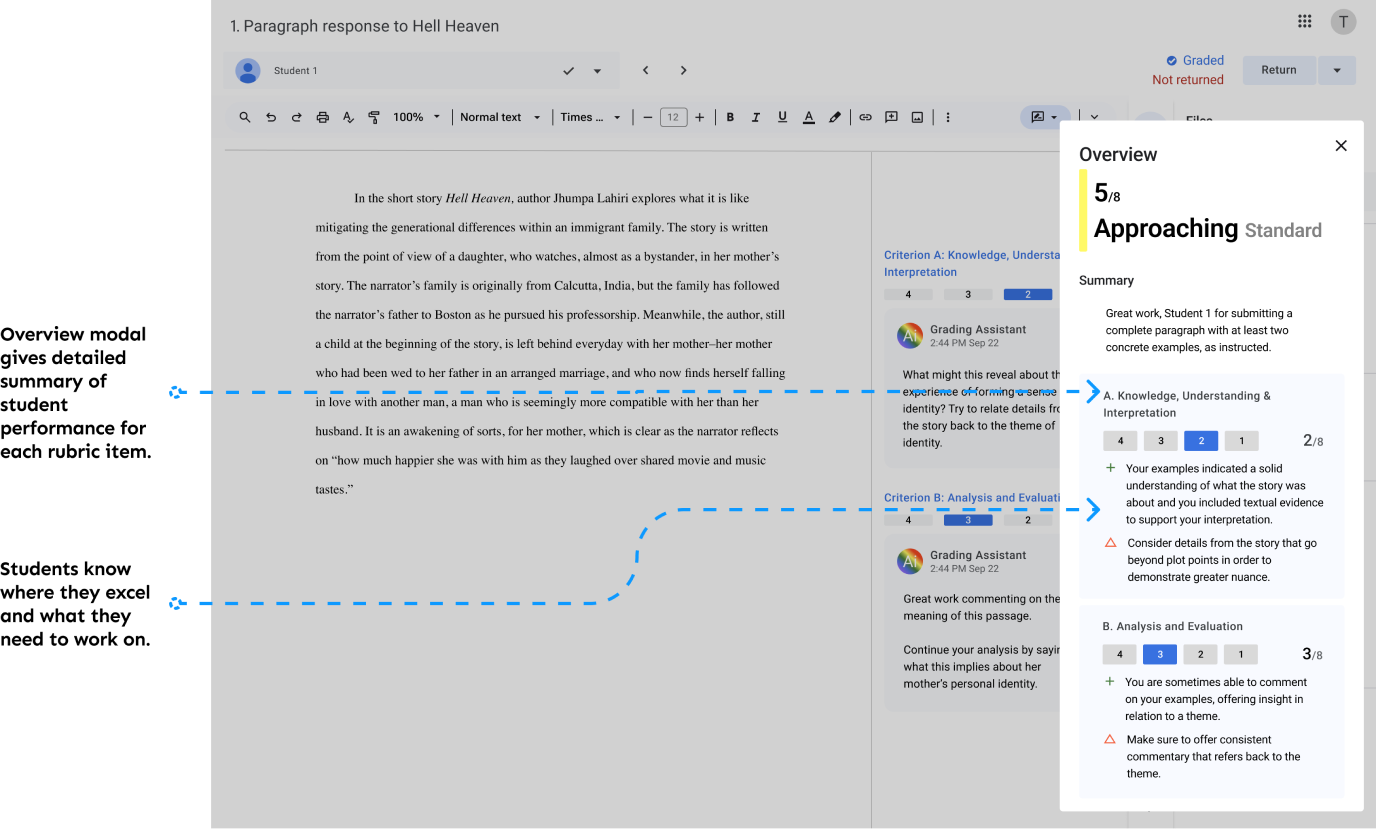

Overview Modal

When teachers grade thoroughly, they include in-text comments as well as a summary, which gives students a clear focus moving forward. The overview modal, accessible from the side panel of the individual assignment page, serves this purpose.

New Features

Deliver

Changing teachers’ relationship with AI

Usability test results

In my usability tests, I was eager to test how intuitive the new feature would be for users. In order for teachers to want to use this feature, it should be easy enough to use, even with the initial learning curve. It should also align with their expectations of a Google product.

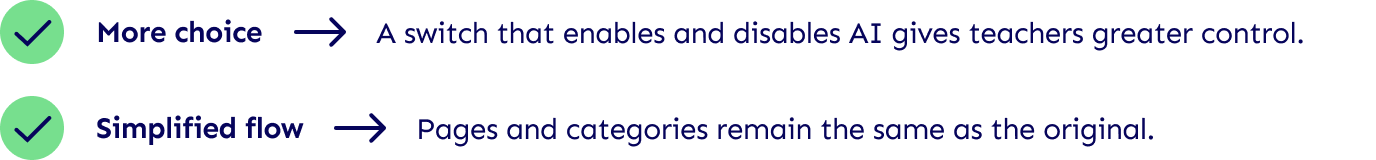

What worked

Brand Consistency

Users were able to complete all tasks and responded well to the added feature’s UI.

Comment-Rubric Link

Users found the comments linked to the rubric criteria to be a helpful addition.

Sufficient teacher input

Users felt that categories in “Grader Settings” aligned with their own grading.

Summarized Results

Users loved seeing a summary of individual and whole-class student performance.

Overall, the feature add-on was met with applause. Users found the feature easy to use and consistent with Google Classroom’s branding/interface. The categories in “Grader Settings” were aligned with their own grading considerations. They loved seeing summarized data—for individual students or for the whole class.

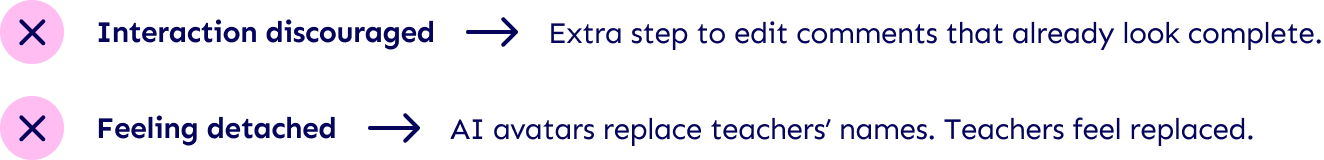

What needs work

Ethical dilemmas

Users were uneasy about grades being assigned to work they had never seen.

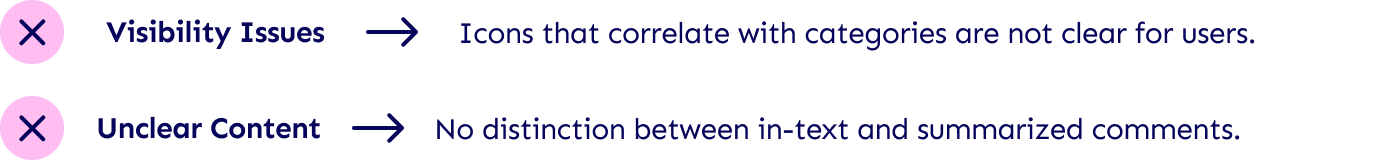

Visibility issues

Users had a hard time distinguishing icons in the settings modal (comments vs. score).

Cluttered interface

Users found a couple of pages to be busy and redundant, both in function and in UI.

One key concern highlighted in usability testing was the ethical dilemma teachers faced when using an AI to grade student work. Two users rated their likelihood of using the product at 5/5; the other two rated it 3/5 and 2/5. For the latter two, the main hesitation was the ethics of AI-generated grades and how to address them with students.

Giving power back to teachers…

I deduced that the ethical dilemmas could be smoothed out if we could change how users perceived the role of the AI Grading Assistant. I realized that in the original iteration, the push of the AI Grade button registered as AI replacing the teacher (grading in place of the teacher).

I decided to revisit the idea of a switch to turn the AI Grading Assistant on and off. If teachers saw the AI as a true assistant, they might have fewer ethical concerns about it. For instance, preliminary research indicated that teachers have no qualms using AI to help write emails. Why should grading be any different?

Giving teachers more control and flexibility…

V1 Assignment Dashboard with Button

V2 Assignment Dashboard with Switch

A switch, rather than a button, gives teachers greater autonomy. They can activate the AI to help generate ideas, or deactivate it and write in their own grades. The AI still lightens the cognitive load for teachers while allowing them to feel in control. Additionally, the switch helps maintain the original flow, further positioning the feature as a tool instead of a replacement.

Getting teachers more involved in the process…

V1 Individual Assignment Page with pre-placed AI comments

V2 Individual Assignment Page with “click-in” AI comments

Streamlining settings…

V1 Cluttered and Unclear Settings Modal

V2 Simplified Settings Modal and Added to Universal Settings

Final synthesis

Conclusion

Next Steps

I’d be eager to test new iterations to gauge if teachers’ attitudes towards AI grading have shifted. Regardless of their comfort level, they’ll need to address student AI use. Ongoing testing could reveal if this add-on nets positive gains for teachers and students as AI attitudes evolve.

Allowing teachers to train the AI Grading Assistant on their writing voice and grading style using previously graded work could further ease hesitations. Practically, it may reduce the need for editing AI comments. Emotionally, teachers may feel less guilty about using AI they’ve helped train.

What I would have done differently

In hindsight, I tried to cover too much ground, especially when ideating solutions. Although I focused on users’ pain points, I veered from the core problem: helping teachers overcome grading overwhelm and maximize time/energy. Creating feedback loops or summary reports doesn’t directly address that issue.

I realize I should have narrowed the scope to the main problem and deprioritized extraneous features.

What I learned

This case study gave me important insight into my users. Grading isn’t just physically exhausting; the mental toll is significant too. I learned the importance of getting to the core of a problem and holding onto it throughout the design process.

I also learned that seemingly small UI tweaks can impact how people perceive a product. Switching from a button to a toggle, for instance, could shift users’ views of the role of that product. Through this project, I gained a newfound appreciation for how minuscule details influence the overall experience.

Thanks for taking the time to look through this case study!